AI may boost business efficiencies — but at what cost for human experience?

As our interactions with AI systems become more frequent, we risk undermining the very foundations of what makes us human, potentially losing cognitive skills, self-expression, and personal autonomy.

The second part of this article analyzes the implications for human-centered AI design.

Organizations have rushed to implement AI solutions to save time and resources. For their part, consumers have no choice but to be exposed to AI; however, they have also benefited from greater access, ease, and efficiency in obtaining the solutions they need.

But while business performance and overall consumer satisfaction may be boosted, AI solutions come with significant limitations for the users upon whom they are imposed.

A team of researchers led by Ana Valenzuela, professor in the Full Time MBA and Executive MBA programs at Esade, has examined how AI constrains the human experience, that is, people’s overall perceptions, feelings, and behaviors as they interact with technology. Their analysis, published in the Journal of the Association for Consumer Research, examines the tension between AI’s advantages and its potential negative impact on users, and contains an urgent warning for policymakers.

The rise of the machines

Automated systems enhance the quality of business decision-making based on instant analysis of big data, leading to more accurate forecasting, improved outcomes and lower costs. For consumers, this means that the customer journey is increasingly a relationship with a machine.

While much of the AI debate has focused on societal aspects such as algorithmic discrimination, privacy concerns and potential catastrophes caused by the rise of the machine, consumer research has begun to identify psychological tensions related to how personal experiences are shaped by engagement with algorithms.

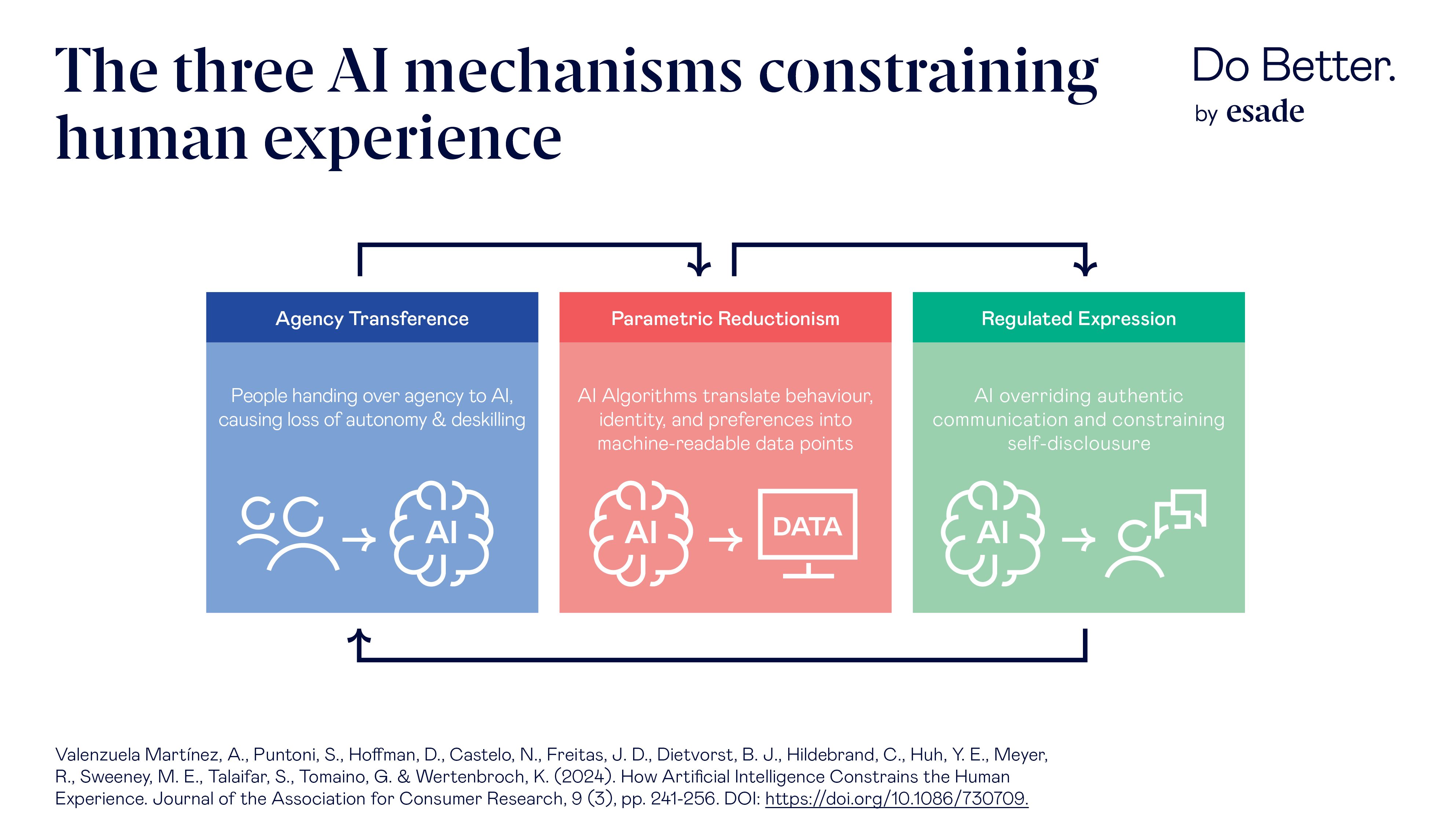

Drawing on these social and personal perspectives, the research team led by Valenzuela identified three core mechanisms through which AI constrains the human experience: agency transference, parametric reductionism and regulated expression.

Outsourced choices

As algorithms increasingly dictate our choices (which TV shows get renewed based on reaction scores, the offers available in online supermarkets, etc.), agency is transferred from humans to AI. Decision-making trajectories are steered by algorithms that offer us content based on past behaviors, and the world we are exposed to is limited accordingly.

One of the constraining implications of this agency transference is the loss of serendipity. Fortunate accidental discoveries are greatly diminished by recommendation algorithms that reinforce past behaviors and limit our capacity for exploration and change.

The transference of agency isn’t limited to decision-making — it can also lead to skills being lost to AI. If the Industrial Revolution marked a steep decline in the number of people who were able to spin cotton, AI has the potential to replace the need for critical thinking and creative idea generation.

Tasks may be completed in a fraction of the time, but valuable cognitive and practical skills may end up shrinking as a result, especially among knowledge workers who rely on AI to augment some of their tasks. And the potential repercussions go beyond the loss of workplace skills: they can also affect speech patterns and social skills.

For instance, research into the link between smartphone use and social skills found that younger people had much lower levels of emotional intelligence, mindfulness and social competence than middle-aged and older people. Other research has noted the negative impact of the internet on memory: information doesn’t need to be retained when it’s at our fingertips.

The reductionism of humanity

The second mechanism identified is parametric reductionism. Algorithms reduce human behavior to a set of variables, parameters and formulae, with the complexities of human thought categorized and scored to predict behavior. When humans are objectified through an AI lens, bias and discrimination inevitably occur (such as the notable case of Amazon’s discriminatory hiring practices, when its recruitment algorithms attributed more weight to characteristics traditionally associated with men).

The codification of past behavior results in a misalignment between what the algorithm predicts and offers, and the factors that influence individual choices. It also overlooks the fact that people’s desires are often at odds with their own goals: drinkers who want to quit alcohol may be tempted by offers based on past buying habits.

In addition, users may feel disconnected from AI systems that fail to reflect their true preferences or individuality, especially in sensitive areas.

The privacy paradox

Finally, the authors identify a third mechanism of regulated expression, which refers to the ways in which AI systems influence, alter, or constrain how individuals communicate and express themselves, both with technology and with other humans.

This comes from different sources, one of them being voluntary self-disclosure. AI relies on large amounts of data and consumers are increasingly expected to provide personal information to complete basic tasks, such as downloading an app to order a drink from a bar. Consumers may express worry over the amount of information they are expected to divulge online and via apps but then take to social media to share the most intimate details of their private lives. This privacy paradox influences how people interact with each other and with AI, and overrides the natural instincts of communication.

Another source is constrained interaction and self-expression. If consumers know they are communicating with a chatbot, they will limit the information they provide and modify their language. If they believe their conversation is with a human, they are more open to sharing personal details. Businesses are exploiting this knowledge to create more human-like engagement with automated systems: the more natural the conversation seems, the more the consumer is willing to share.

An urgent issue for society

As practices that harvest and process user data become more widespread, people are increasingly suspicious and guarded. Their expressions and communication become less natural, their demeanor more controlled.

This has a much wider impact than the protection of data: inauthentic online behavior predicts lower wellbeing, is associated with a higher tendency towards mental health issues, and creates a false perception of reality in society.

As the use of AI continues largely unencumbered by definitive rules or standards, relationships between consumers and companies are increasingly automated. Customer journey aside, the ubiquity of AI in healthcare, criminal justice, employment and more may be constraining agency, skills, equality, dignity and diversity.

Policymakers must prioritize urgent scrutiny to ensure that AI empowers and enriches the human experience rather than controlling it. In the second part of this article, we delve into the authors’ proposal for human-centered AI design.
