Keys to govern AI at the board level

The adoption of AI at the board level requires a new strategic framework to ensure ethical integration aligned with the organization’s values.

What happens inside boardrooms when technology advances faster than the capacity to govern it? Every day, technology becomes more complex and demands continuous learning. Board directors, who are responsible for actively leading through this process, now face a new challenge: AI governance.

The evidence is compelling. According to a Deloitte report, 79% of board directors acknowledge that they lack sufficient knowledge about AI, and only 2% claim to have strong expertise. At the same time, 75% of CEOs state that their company’s competitive advantage will depend on who masters generative AI first. This gap between awareness and action has never been so evident.

Nine out of ten executives say they already have “structures and processes” in place, yet only one in four organizations uses actual governance tools. This creates a clear risk to trust and regulatory compliance, and it is a dangerous gap that can no longer be ignored.

In this context, the Board Guide to Ethical AI developed by the Esade Center for Corporate Governance in collaboration with IBM provides a clear diagnosis and offers concrete recommendations to address the impact of AI in this uncharted territory of corporate leadership.

AI is reshaping the rules of corporate governance

AI is no longer an operational issue; it has become a structural one. Its integration directly affects corporate strategy alignment, sustainability, human capital, the business model, and stakeholder relations. As the guide reflects, the board's agenda can no longer be addressed without considering this technology.

Its implications unfold across three key dimensions:

- Strategic priorities: AI is reshaping decisions related to investment, innovation, cybersecurity, and competitive differentiation.

- Board composition: only 8% of board directors worldwide have technological expertise, a deficit that threatens fiduciary oversight.

- Trust: ethical management of AI influences the confidence of investors, regulators, and auditors, and it is increasingly becoming an ESG assessment criterion.

In this environment, board directors must adopt a more informed, multidisciplinary, and proactive form of leadership.

What risks must the board address?

The incorporation of AI into board deliberations has opened up extraordinary opportunities. It brings greater agility to data analysis, accelerates process automation, and allows operations to scale almost instantly. However, it also introduces risks that require qualified board oversight.

From algorithmic bias and discrimination to opacity and lack of explainability, AI can lead to harmful outcomes for both specific groups and the organization as a whole. Boards must promote ethical models that help clarify the reasoning behind technological decisions presented to them.

Security and privacy are also at stake, owing to risks such as data leaks, data poisoning, and the misuse of sensitive information, as well as the proliferation of deepfakes, disinformation, and manipulation. These risks should heighten board awareness, because any ethical or regulatory failure can result in sanctions, litigation, and loss of trust.

In the European Union, the AI Act establishes four levels of risk, each with corresponding obligations for companies. In Spain, the Spanish Agency for the Supervision of Artificial Intelligence (AESIA) and the regulatory sandbox (a controlled testing environment for developing innovative projects) are already operational, while major international standards (ISO/IEC 42001, NIST AI RMF) continue to evolve. Keeping pace has become a challenge in itself.

Board oversight can no longer be limited to compliance; it must also anticipate ethical, social, and legal impacts.

What governing AI entails: six key areas

The guide identifies six essential domains for building a robust framework for ethical AI governance:

- Principles, policies, and processes: define values, ethical criteria, and their operational translation.

- Organizational structure: clarify roles, establish oversight committees, and ensure accountability.

- Assessment and auditing: perform initial and recurring evaluations, define metrics, and maintain controls and traceability.

- Responsible technology and development: ensure AI design is aligned with safety, privacy, and explainability requirements.

- Operating model: integrate AI into risk management, data governance, and internal processes.

- Training and awareness: elevate knowledge not only within the board, but across the entire organization.

A well-prepared board must ensure that all six domains are integrated, up to date, and aligned with corporate strategy.

Recommendations for board directors

The guide proposes a clear roadmap for board members:

- Establish an AI governance framework aligned with corporate values and regulatory requirements.

- Create an advisory committee or dedicated group to address ethical and technical AI matters.

- Assign formal responsibilities for oversight, auditing, and compliance.

- Implement mandatory, multidisciplinary board training.

- Strengthen data governance, ensuring quality, traceability, privacy, and security.

- Ensure transparency and explainability in critical AI models.

- Conduct continuous audits and assess ethical impacts.

- Promote an ethical culture across all business functions.

Board committees play a pivotal role:

- Audit committee: traditionally focused on financial oversight, it must now also supervise ethical risks, such as those related to data integrity and fraud.

- Risk committee: responsible for developing a dedicated framework for ethical and technological risks.

- Nomination and compensation committee: tasked with ensuring that talent and incentives are aligned with AI ethics.

- Sustainability committee: must evaluate social impacts, respect for human rights, and the environmental sustainability of new AI-driven models.

The executive committee, in turn, must translate these strategic requirements into operational execution and report to the board continuously.

IBM’s holistic vision

Governing AI requires values, ethics, and strategic alignment. A holistic approach is essential to mitigate risks, build trust, attract and retain talent, and strengthen reputation.

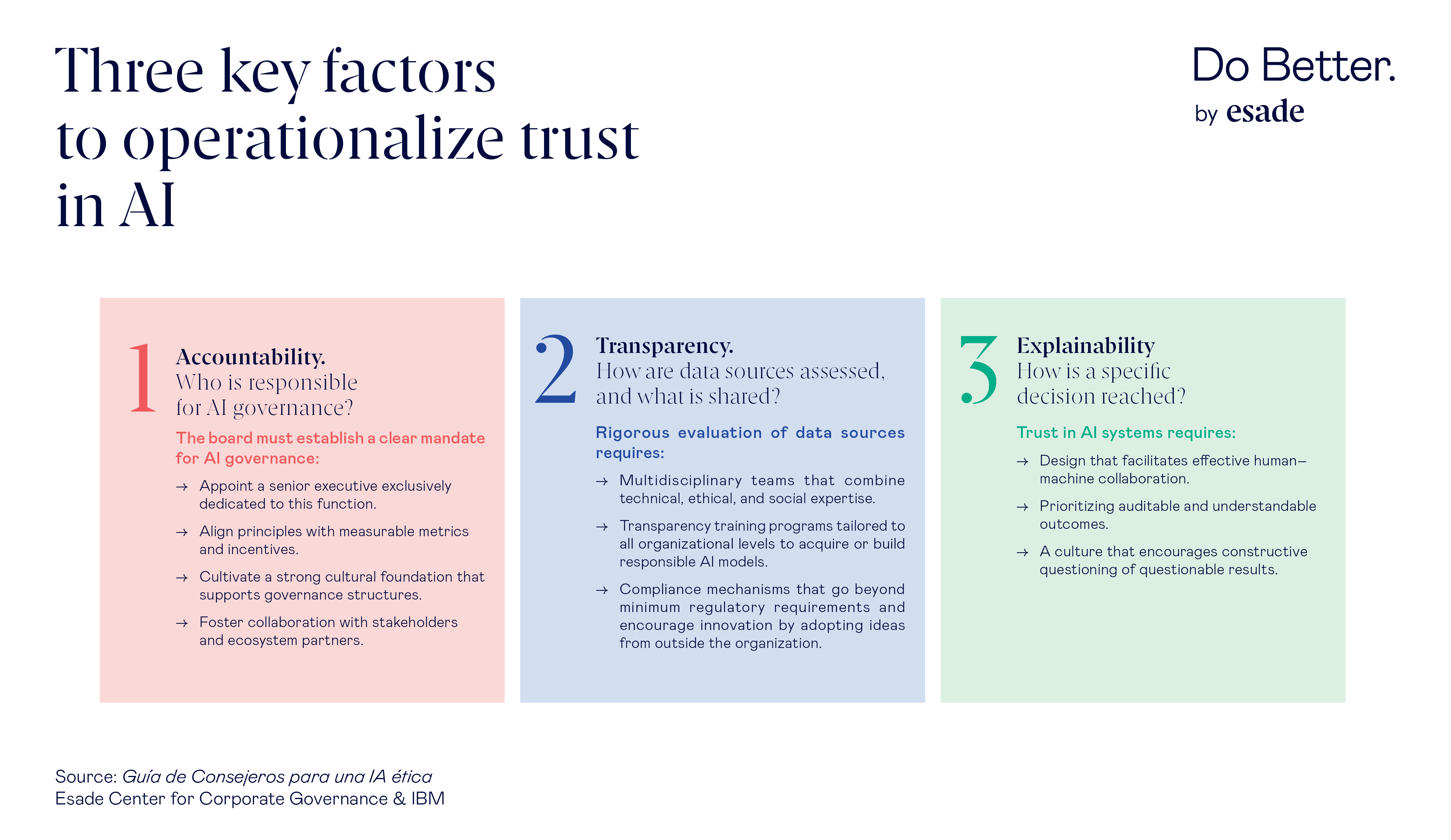

Grounded in three principles and three operational factors, IBM’s holistic approach, applied internally with IBM acting as its own “Client Zero,” has optimized organizational processes and demonstrates a key insight: when used correctly, AI reduces risks and creates value.

Three principles to govern AI


IBM’s approach provides a cross-functional implementation model supported by real-world evidence: it builds trust among clients and investors, attracts high-quality talent, and strengthens corporate reputation. This creates meaningful competitive differentiation and enables sustainable positive outcomes.

Leading with ethics: the new mandate

The rise of AI has ushered in a new business era, “more profound than electricity or fire,” as Sundar Pichai has suggested. It represents both an opportunity and a strategic risk.

Ensuring a positive impact requires boards to evolve toward informed, ethical, and forward-looking leadership—shifting from reactive to proactive governance. Governing AI effectively is not only a regulatory requirement; it is a source of competitive advantage.

