AI governance must ask the right questions to protect human autonomy

Every AI system subtly guides how we think, choose, and act, quietly eroding free and informed decision-making. A new governance framework proposes actionable measures to treat human autonomy as a design property of AI.

You pick up your phone to check the time and notice that a friend has sent you a link to a funny video. Half an hour later, you’re still holding your phone, scrolling through TikTok or watching YouTube videos perfectly tailored to your mood. The AI algorithms behind these platforms have quietly learned your preferences and adjusted their suggestions, and in doing so they have steered your attention for 30 minutes. On the surface, it was your choice, but in practice it was the product of a series of subtle nudges. You’ve become unconsciously trapped in a loop of online content. Most likely, you don’t even want to be there, but your ability to decide has already slipped away.

It may look like a rather gloomy picture, but the truth is that AI isn’t only deciding which content is recommended to us; it’s also shaping how we perceive, think, and decide. In embracing the comfort of extreme personalization (if the algorithm brings me what I like, I no longer need to look for it myself), we give up the intrinsic value of deciding for ourselves.

Online recommendations reveal only a fraction of AI’s growing influence on human autonomy. AI systems are increasingly present in our daily lives, whether at work, at school, or in our leisure time, and most are designed for efficiency rather than reflective deliberation. They also offer a limited set of categories through which we frame our decisions, and they can present ideas in a favorable or unfavorable light. In doing so, they quietly influence how problems are defined, which options are made visible, and what forms of reasoning seem legitimate. On top of that, their outputs rest on opaque criteria that escape public scrutiny.

This is not an accidental effect of the technology but the result of organizational choices about how AI is conceived and designed. Esade researchers Paula Subías-Beltrán and Irene Unceta argue that, if we care about living in healthy democracies where citizens can actively participate in public deliberation, human autonomy should be a central principle in AI development. Their study proposes a governance framework that helps organizations understand, question, and manage how their systems affect human decision-making at every level. The framework calls for a shift in AI design: from maximizing user engagement to genuinely centering systems on users by protecting their rights and autonomy.

Their research is published in the journal AI & Society.

How AI subtly chips away at our autonomy

“AI creates a context for decision-making,” says Professor Unceta, director of the Double Bachelor Degree of Business Administration & Business and Artificial Intelligence at Esade. “Imagine yourself in a classroom full of people. The decision to raise your hand or to speak up depends not only on your own thoughts, but on everything else: the presence of classmates, the attitude of the professor... The classroom itself provides the context for what to say or whether to say it at all. AI works in a similar way: it creates the environment in which decisions are made, subtly defining which options seem available, relevant, or meaningful.”

If AI systems are shaping the context in which we define problems and perceive options, we need to ask an important question: How can we make sure that AI development takes into account the impacts on our autonomy?

Autonomy is the ability to make free, informed choices in line with our own values and beliefs. It is not about absolute independence but about having control over who we are and taking ownership of what we do, and it is deeply shaped by the contexts in which we make decisions. What we often don’t realize is that, in an increasingly technological environment, we may delegate our agency to AI systems without being fully aware of it. In the process, we give up the authenticity of our actions and our authority over them. AI systems can easily upset the balance between the context that shapes our choices and our control over them, often without our noticing.

This has several consequences. Content-recommendation engines can reinforce existing biases: a user who engages with wellness videos may gradually be served extreme diet content, and someone reading about social issues may end up in a political echo chamber that only reinforces their beliefs. In these cases, AI isn’t deliberately manipulating users. Rather, optimization algorithms prioritize engagement over diversity of information and shape users’ decisions along the way. In other cases, AI can drive decisions that ignore the real circumstances of the people affected. Automated credit-scoring systems can unfairly exclude reliable borrowers by embedding historical bias into their models, and hiring algorithms can filter out qualified candidates because their CVs don’t match biased records of “successful” employees.

Autonomy is essential for democracy

In democratic societies, autonomy is not merely individual, but relational and collective: citizens form their opinions and decisions by engaging in public reasoning and deliberation. In the long term, most of the effects of AI on our personal autonomy are likely to become a threat to the collective foundations of democracy.

The loss of personal agency prevents citizens from participating intentionally in public debate; the erosion of critical capacity reduces their ability to weigh competing ideas and form independent judgments; the weakening of their sense of authority undermines their confidence to engage in public matters and uphold their positions. The list goes on.

If AI is designed in ways that give people less diverse information and fewer opportunities for critical engagement, our ability to think independently and challenge dominant narratives is undermined. If people start outsourcing everyday reasoning to algorithms, human reflection and the opportunity for dissent decrease. Autonomy is central to meaningful engagement in collective deliberation and public decision-making.

An AI governance framework to ensure autonomy

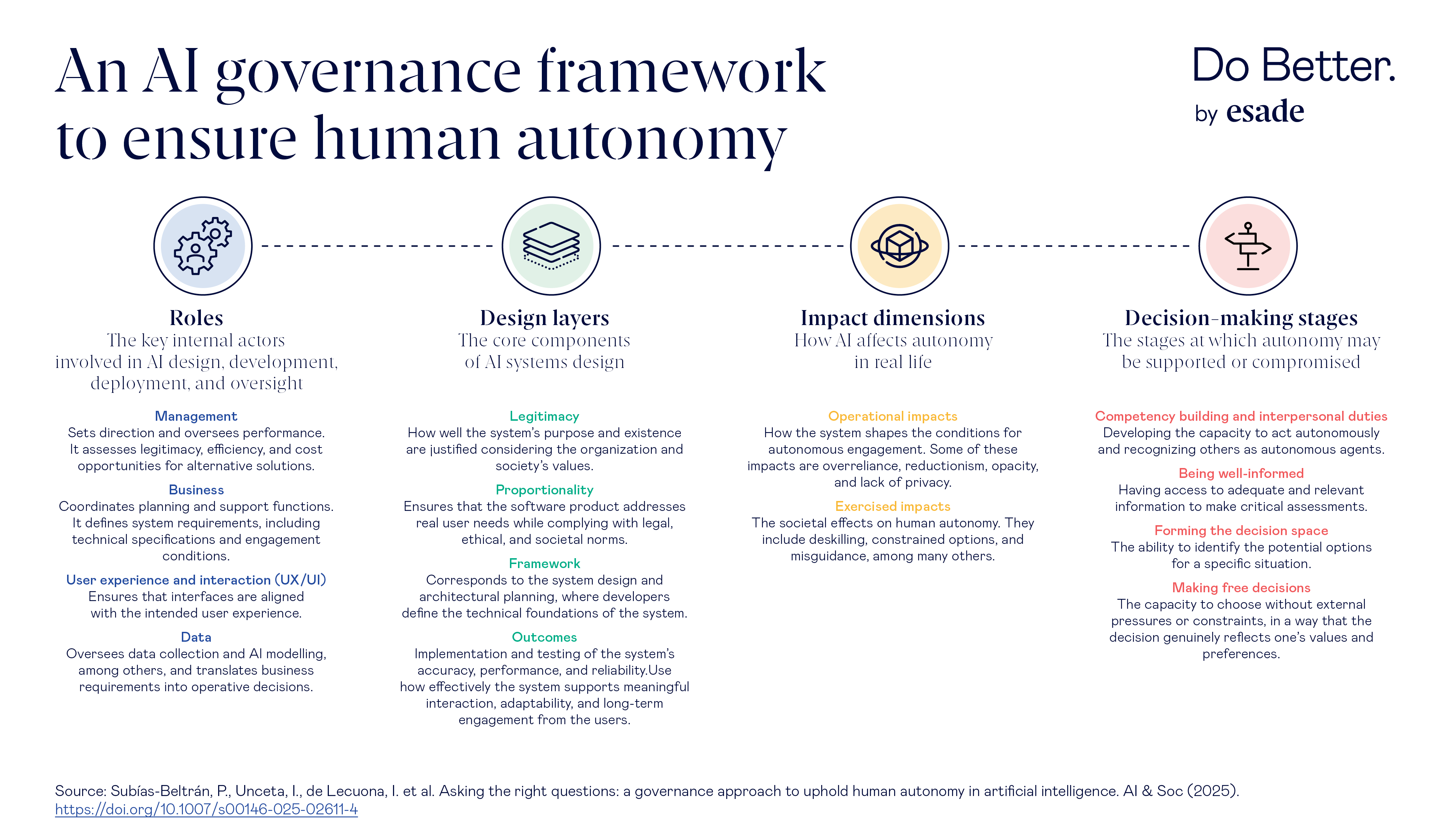

Subías-Beltrán and Unceta propose a governance framework that goes further than an ethics checklist or compliance guidelines. It’s a practical tool that uses a set of 39 diagnostic questions to help organizations identify where and how autonomy may be under threat. Each question is tagged across four axes:

Autonomy is touched upon by some existing governance frameworks, but it’s often secondary to fairness, privacy, or accountability. This new proposal treats it as a measurable design property. The objective is to make autonomy tangible, so teams working with AI can ask the right questions frequently and from the start. “Upholding autonomy is not an abstract ideal—it can and must be built into the way AI is designed and managed,” says Subías-Beltrán.

This perspective treats autonomy as a governance issue, not a philosophical afterthought. The framework gives organizations concrete instruments to evaluate how each stage of an AI system, from data gathering to model updates, affects people’s ability to understand, decide, and act freely.

Why we must govern AI now

There’s an urgent need to govern AI more robustly, because these systems are increasingly integrated into everyday life. Generative AI, for instance, is already being deployed in education, healthcare, and finance, fields with profound effects on people’s lives. Each domain comes with its own autonomy risks. Can AI tutors be responsible for teaching students? Should patients have to consent to the use of diagnostic tools? Do borrowers understand automated credit assessments?

We are not without guidance on how to manage AI. The EU’s AI Act identifies human autonomy as a guiding principle, but it stops short of explaining how to operationalize it. This is precisely where Subías-Beltrán and Unceta’s work adds value: it turns a principle into a tangible process.

Who is responsible for governing AI?

Autonomy is not an afterthought; it is fundamental to democracy and a sustainable future. Subías-Beltrán and Unceta emphasize that responsibility for AI governance must be distributed rather than left to a single person or department.

The new framework clarifies how management, business teams, developers, and designers all share accountability. This way, autonomy becomes part of daily operations—something designed, safeguarded, and constantly evaluated rather than reviewed once a year.

As the researchers note, “upholding human autonomy today is essential to secure sustainable and democratic futures tomorrow.” Their study opens our eyes to the importance of understanding how AI can be designed differently. Indeed, when interacting with AI, our freedom to choose, think, and act is influenced not only by what AI can do but by how we choose to govern it.

Header image: Yutong Liu & Digit / https://betterimagesofai.org / https://creativecommons.org/licenses/by/4.0/

Associate Professor in the Department of Data, Analytics, Technology and Artificial Intelligence (DATA)
